31-10-2023 (WASHINGTON) In an effort to mitigate the risks associated with artificial intelligence (AI), President Joe Biden issued a new executive order on Monday, October 30. The order aims to protect consumers, workers, and minority groups, and to address national security concerns, in the rapidly evolving landscape of AI technology.

The executive order mandates that developers of AI systems with potential risks to US national security, the economy, public health, or safety must share the results of their safety tests with the US government, in accordance with the Defense Production Act, before releasing their products to the public.

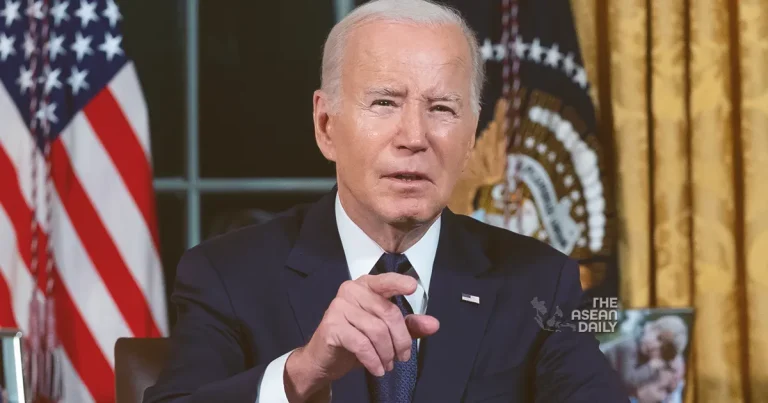

President Biden, who signed the order at the White House, emphasized the need to govern AI technology, stating, “To realise the promise of AI and avoid the risk, we need to govern this technology. In the wrong hands, AI can make it easier for hackers to exploit vulnerabilities in the software that makes our society run.”

This move is the latest action by the Biden administration to set regulatory boundaries around AI, a technology that has advanced rapidly in capability and popularity amid relatively limited regulation so far. The order, however, has drawn mixed reactions from industry and trade groups.

Bradley Tusk, CEO of Tusk Ventures, a venture capital firm with investments in tech and AI, welcomed the move but expressed concerns that tech companies may hesitate to share proprietary data with the government due to fears it could be disclosed to competitors. He pointed out that without a robust enforcement mechanism, the effectiveness of the executive order might be limited.

NetChoice, a national trade association whose members include major tech platforms, criticized the order as an "AI Red Tape Wishlist" that could deter new companies and competitors from entering the marketplace and expand federal government control over American innovation.

The new executive order goes beyond the voluntary commitments made earlier in the year by AI companies such as OpenAI, Alphabet, and Meta Platforms, which had pledged to watermark AI-generated content to make the technology safer.

As part of the order, the Commerce Department will develop guidance for content authentication and watermarking to label items generated by AI, ensuring clarity in government communications.

The order also establishes requirements for intellectual property regulators and federal law enforcement agencies to address the use of copyrighted works in AI training, including evaluating AI systems for potential violations of intellectual property law.

Numerous writers and visual artists have filed lawsuits accusing tech companies of stealing their works to train generative AI systems, while tech companies argue that their use of the content falls under the fair-use doctrine of US copyright law.

Separately, the Group of Seven (G7) industrial countries announced a code of conduct for companies developing advanced AI systems.

Some experts have pointed out that the United States lags behind Europe in AI regulation and have called for laws with substantial regulatory measures. A senior administration official pushed back on the criticism but acknowledged that legislative action was also needed, and President Biden called on Congress to act, particularly to protect personal data.

US Senate Majority Leader Chuck Schumer said he intends to have AI legislation ready within a few months.

US officials have raised concerns that AI could exacerbate bias and civil rights violations. President Biden's executive order seeks to address these issues by issuing guidance to various entities to keep AI algorithms from intensifying discrimination. The order also calls for best practices to address the potential negative effects of AI on the workforce, including job displacement, and requires a report on the technology's labor market consequences.