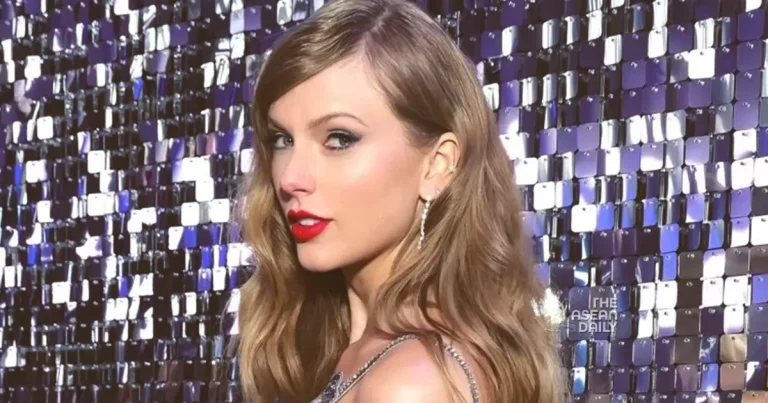

27-1-2024 (WASHINGTON) Fans of Taylor Swift, politicians and the White House expressed outrage on January 26 at AI-generated fake pornographic images of the megastar that circulated widely on X and other platforms.

One image of the US megastar amassed 47 million views on X, formerly Twitter, before being taken down on January 25. According to US media reports, the post remained live for approximately 17 hours.

“It is alarming,” stated White House Press Secretary Karine Jean-Pierre when asked about the images.

“Sadly, we know that lack of enforcement (by the tech platforms) disproportionately impacts women and girls, who are the primary targets of online harassment,” Ms. Jean-Pierre added.

While deepfake pornographic images of celebrities are not new, activists and regulators are concerned that easily accessible tools employing generative artificial intelligence (AI) will lead to an uncontrollable surge of harmful content.

Non-celebrities are also falling victim, with increasing reports of young women and teens facing harassment on social media through sexually explicit deepfakes that are becoming more realistic and easier to create.

The targeting of Swift, the second most listened-to artist in the world on Spotify (just behind Canadian rapper Drake), could shed new light on the phenomenon, given her vast fan base’s outrage.

In 2023, Swift used her fame to encourage her 280 million Instagram followers to vote. Her fans also pressured the US Congress to hold hearings about Ticketmaster’s mishandling of the sale of their hero’s concert tickets in late 2022.

“The only ‘silver lining’ about it happening to Taylor Swift is that she likely has enough power to get legislation passed to eliminate it. You people are sick,” wrote influencer Danisha Carter on X.

X is among the largest platforms for pornographic content globally, analysts say, as its policies on nudity are less stringent than those of Meta-owned Facebook or Instagram.

This leniency has been tolerated by Apple and Google, the gatekeepers for online content through the guidelines they establish for their app stores on iPhones and Android smartphones.

In a statement, X asserted that “posting Non-Consensual Nudity (NCN) images is strictly prohibited on X, and we have a zero-tolerance policy towards such content.” The platform stated that it was “actively removing all identified images and taking appropriate actions against the accounts responsible for posting them.”

However, the images continued to be available and shared on Telegram.

Representatives for Swift did not respond to requests for comment. The star has also been the subject of right-wing conspiracy theories and of fake videos depicting her promoting high-priced cookware from France.

“What’s happened to Taylor Swift is nothing new. For years, women have been targets of deepfakes without their consent,” said Ms. Yvette Clarke, a Democratic congresswoman from New York who supports legislation to combat deepfake porn.

“And with advancements in AI, creating deepfakes is easier & cheaper,” she added.

Mr. Tom Kean, a Republican congressman, warned that “AI technology is advancing faster than the necessary guardrails. Whether the victim is Taylor Swift or any young person across our country, we need to establish safeguards to combat this alarming trend.”

Implementing legally mandated controls would require the passage of federal laws, a prospect that remains uncertain in a deeply divided US Congress.

US law currently provides tech platforms with broad protection from liability for content posted on their sites, and content moderation is voluntary or implicitly enforced by advertisers or app stores.

Numerous well-publicized cases of deepfake audio and video have targeted politicians or celebrities. Women are the primary targets, depicted in sexually explicit images that are easily accessible on the Internet. Software to create such images is widely available online.

Research cited by Wired magazine indicates that 113,000 deepfake videos were uploaded to the most popular porn websites in the first nine months of 2023. Additionally, a startup’s research in 2019 found that 96 per cent of deepfake videos on the Internet were pornographic.