25-10-2023 (NEW YORK) The proliferation of child sexual abuse images on the internet could worsen if measures are not taken to control artificial intelligence (AI) tools that generate deepfake photos, according to a new report by the United Kingdom-based Internet Watch Foundation (IWF). The report calls on governments and technology providers to act swiftly before a flood of AI-generated images overwhelms law enforcement investigators and increases the number of potential victims.

Dan Sexton, the watchdog group's chief technology officer, stressed the urgency of addressing the issue, saying that the harm is already happening. The report highlighted a case in South Korea in which a man was sentenced to two and a half years in prison for using AI to create virtual child abuse images.

The report also documented instances of children using these AI tools against one another. In one incident, police in southwestern Spain investigated allegations that teenagers had used a phone app to manipulate photos of their schoolmates so that they appeared nude.

The rise of generative AI systems, which let users describe in words what they want to produce, has a dark side. If left unchecked, a flood of deepfake child sexual abuse images could overwhelm investigators, who might end up trying to rescue children who turn out to be virtual characters rather than real victims. Perpetrators could also use these images to groom and coerce new victims.

The IWF found faces of famous children online and observed significant demand for new images created from existing content depicting previously abused victims, a trend that shocked the organization.

The charity, which focuses on combating online child sexual abuse, began receiving reports of abusive AI-generated imagery earlier this year. This led it to investigate dark web forums, where abusers shared tips and marveled at how easily they could generate explicit images of children. The content being shared is becoming increasingly lifelike.

Beyond documenting the growing problem, the IWF report calls on governments to strengthen laws against AI-generated abuse. It is aimed particularly at the European Union, where there is an ongoing debate over surveillance measures that would automatically scan messaging apps for suspected images of child sexual abuse.

The IWF’s work also aims to prevent the re-victimization of past abuse victims through the redistribution of their photos. The report suggests that technology providers could do more to make their products harder to misuse in this way, although some of the tools are difficult to control.

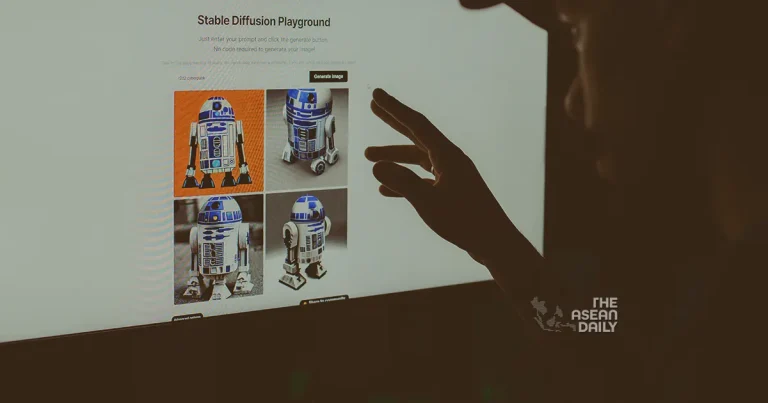

Some AI models, such as OpenAI’s image generator DALL-E, have been successful at blocking misuse because their developers fully control how they are trained and used. By contrast, an open-source tool called Stable Diffusion, developed by London-based startup Stability AI, has become a favorite of producers of child sexual abuse imagery. Although Stability AI has added filters to block unsafe and inappropriate content and prohibits illegal uses, older unfiltered versions of the tool remain accessible and are frequently used to create explicit content involving children.

Because it is difficult to regulate what people do on their personal computers, ways must be found to prevent openly available software from being used to create harmful content. Several countries, including the US and the UK, have laws against the production and possession of such images, but enforcement remains a challenge.

The IWF’s report coincides with a global AI safety summit hosted by the British government, to be attended by high-profile figures including US Vice President Kamala Harris and tech industry leaders. IWF CEO Susie Hargreaves expressed optimism and stressed the importance of discussing the darker side of AI technology to raise awareness of the problem.